As I mentioned before, the ASP.NET MVC5 DefaultModelBinder has some quirks. The one I ran into a few days ago is the following:

```csharp
public class MyViewModel
{
    public IEnumerable<int> IntList { get; set; }
}
```

What happens when you call your action method with this JSON request?

```json
{ "IntList": [] }
```

I would expect to find an empty IEnumerable<int> instance in IntList, but I find null there instead.

Why? Because the DefaultModelBinder binds my empty collection as null.
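Where the null comes from can be pictured with a simplified model. As far as I can tell from the MVC5 sources, `JsonValueProviderFactory` deserializes the JSON body and flattens it into prefix/value pairs (`IntList[0]`, `IntList[1]` and so on), and the binder binds `IntList` only if some key with that prefix exists. The class below is a hypothetical, much simplified reimplementation of that flattening (`JsonFlatteningSketch` and `Flatten` are illustrative names, not framework API), assuming the body has already been parsed into dictionaries and lists:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical, simplified model of flattening a parsed JSON body into
// "prefix" -> value entries. Not the framework source; it only illustrates
// why an empty array leaves no key behind.
public static class JsonFlatteningSketch
{
    public static Dictionary<string, object> Flatten(IDictionary<string, object> root)
    {
        var store = new Dictionary<string, object>(StringComparer.OrdinalIgnoreCase);
        foreach (var entry in root)
        {
            Add(store, entry.Key, entry.Value);
        }
        return store;
    }

    private static void Add(Dictionary<string, object> store, string prefix, object value)
    {
        var obj = value as IDictionary<string, object>;
        if (obj != null)
        {
            // Nested object: recurse with "prefix.member" keys.
            foreach (var entry in obj)
            {
                Add(store, prefix + "." + entry.Key, entry.Value);
            }
            return;
        }

        var list = value as IList;
        if (list != null)
        {
            // Array: one entry per element, keyed "prefix[i]".
            // An empty array loops zero times, so nothing is recorded for it.
            for (int i = 0; i < list.Count; i++)
            {
                Add(store, prefix + "[" + i + "]", list[i]);
            }
            return;
        }

        // Leaf value (number, string, bool, null).
        store[prefix] = value;
    }
}
```

Flattening a dictionary that maps `IntList` to an empty list yields no entries at all, while `[1, 2]` yields `IntList[0]` and `IntList[1]`. With no `IntList` key to find, the binder skips the property and it keeps its default value, null.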

To avoid this, you can write a custom model binder:

```csharp
using System;
using System.IO;
using System.Linq;
using System.Text.RegularExpressions;
using System.Web.Mvc;

public class EmptyEnumerableCapableDefaultModelBinder : DefaultModelBinder
{
    public override object BindModel(ControllerContext controllerContext, ModelBindingContext bindingContext)
    {
        object ret = base.BindModel(controllerContext, bindingContext);
        WorkaroundEmptyEnumerablesInitializedToNullByDefaultModelBinder(ret, controllerContext, bindingContext);
        return ret;
    }

    private void WorkaroundEmptyEnumerablesInitializedToNullByDefaultModelBinder(object model, ControllerContext controllerContext, ModelBindingContext bindingContext)
    {
        if (model != null)
        {
            // workaround case when there is an IEnumerable<> member and there is a "member":[] in request
            // but default binder inits member to null
            var candidateList = bindingContext.PropertyMetadata
                .Where(kvp => bindingContext.PropertyFilter(kvp.Key))
                .Where(kvp => CanHoldEmptyArray(kvp.Value.ModelType))
                .Where(kvp => !bindingContext.ValueProvider.ContainsPrefix(kvp.Key))
                .ToArray();

            if (candidateList.Any())
            {
                var contentType = controllerContext.HttpContext.Request.ContentType ?? string.Empty;
                if (!contentType.StartsWith("application/json", StringComparison.OrdinalIgnoreCase))
                {
                    // only JSON request bodies are inspected
                    throw new NotImplementedException(contentType);
                }

                var json = GetJSONRequestBody(controllerContext);

                foreach (var candidate in candidateList)
                {
                    // "Name":[] with optional whitespace; no trailing comma is required,
                    // so the member may also be the last one in the object
                    var emptyEnumerablePattern = "\"" + Regex.Escape(candidate.Key) + "\"\\s*:\\s*\\[\\s*\\]";

                    if (Regex.IsMatch(json, emptyEnumerablePattern))
                    {
                        var pd = bindingContext.ModelType.GetProperty(candidate.Key);
                        var anEmptyArray = Array.CreateInstance(pd.PropertyType.GetGenericArguments()[0], 0);
                        pd.SetValue(model, anEmptyArray);
                    }
                }
            }
        }
    }

    // true if the type is generic over a single T and an empty T[] can be assigned to it
    // (IEnumerable<T>, ICollection<T>, IList<T>, ...), but not e.g. List<T>
    private static bool CanHoldEmptyArray(Type type)
    {
        if (!type.IsGenericType || type.GetGenericArguments().Length != 1)
        {
            return false;
        }

        var elementType = type.GetGenericArguments()[0];
        return type.IsAssignableFrom(elementType.MakeArrayType());
    }

    private string GetJSONRequestBody(ControllerContext controllerContext)
    {
        string ret = null;
        var request = controllerContext.HttpContext.Request;
        var inputStream = request.InputStream;

        // the body has typically been read already by the JSON value provider, so rewind it
        inputStream.Position = 0;

        // leaveOpen: true keeps the request stream open after the reader is disposed
        using (var sr = new StreamReader(inputStream, request.ContentEncoding, false, 1024, true))
        {
            ret = sr.ReadToEnd();
        }

        return ret;
    }
}
```

The point is to inspect the raw request input after the DefaultModelBinder has run, to find out whether it left an enumerable null even though the request contained an empty array for it.

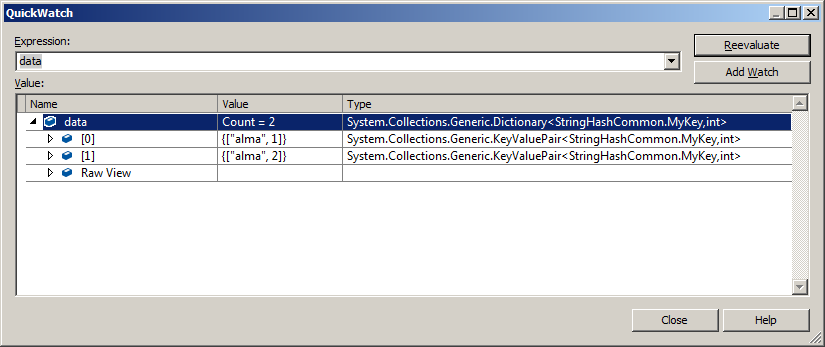

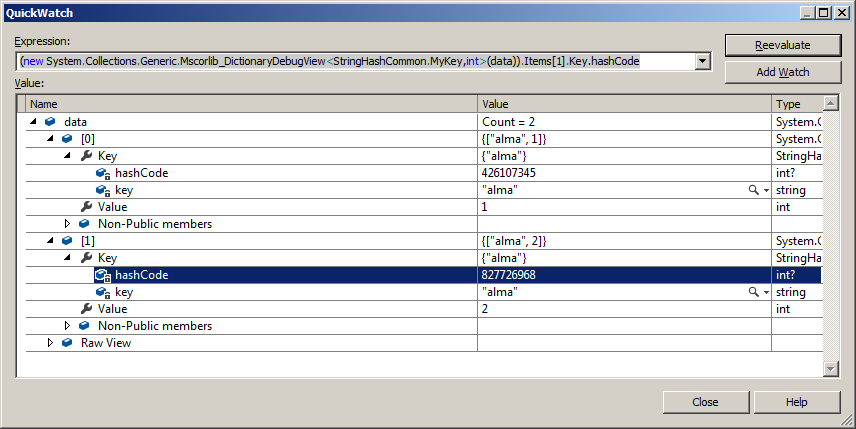

The value providers available in the binding context are of no help, because they simply don't contain our IntList value. Instead, I check the target view model for candidate properties and look up their values directly in the request body. If an empty array is found there, I replace the binder's null with an empty array instance, which fits wherever an IEnumerable<T> is expected.
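The binder has no effect until MVC is told to use it, a step the code above does not cover. A minimal sketch, assuming the `MvcApplication` class in Global.asax.cs that the MVC5 project template generates, makes it the application-wide default binder:

```csharp
using System.Web;
using System.Web.Mvc;

// Sketch of Global.asax.cs; MvcApplication is the template's default class name.
public class MvcApplication : HttpApplication
{
    protected void Application_Start()
    {
        // Use the workaround binder for every model MVC binds.
        ModelBinders.Binders.DefaultBinder = new EmptyEnumerableCapableDefaultModelBinder();

        // The usual area, route, filter and bundle registration follows here.
    }
}
```

Alternatively, `ModelBinders.Binders.Add(typeof(MyViewModel), new EmptyEnumerableCapableDefaultModelBinder());` in the same place registers it for `MyViewModel` only. The narrower registration also limits where the binder reads the request body and where a non-JSON request can reach its `NotImplementedException` branch.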